Recommended Updates

Technologies

Using PySpark Window Functions for Effective Data Analysis

By Alison Perry / May 04, 2025

Want to dive into PySpark window functions? Learn how to use them for running totals, comparisons, and time-based calculations—without collapsing your data.

Applications

How to Clean Data Automatically in Python: 6 Tools You Need

By Tessa Rodriguez / Apr 30, 2025

Tired of cleaning messy data by hand? Here's a clear 6-step guide using Python tools to clean, fix, and organize your datasets without starting from scratch every time.

Technologies

How Aerospike's New Vector Search Capabilities Are Revolutionizing Databases

By Alison Perry / Apr 30, 2025

Aerospike's vector search capabilities deliver real-time, scalable, AI-powered search within databases for faster, smarter insights.

Technologies

How New Qlik Integrations are Empowering AI Development with Ready Data

By Tessa Rodriguez / Apr 30, 2025

Discover how Qlik's new integrations provide ready data, accelerating AI development and enhancing machine learning projects.

Technologies

Exploring the Real Benefits of OrderedDict in Python

By Tessa Rodriguez / Apr 23, 2025

Learn how OrderedDict works, where it beats regular dictionaries, and when you should choose it for better control in Python projects.

Technologies

Technologies

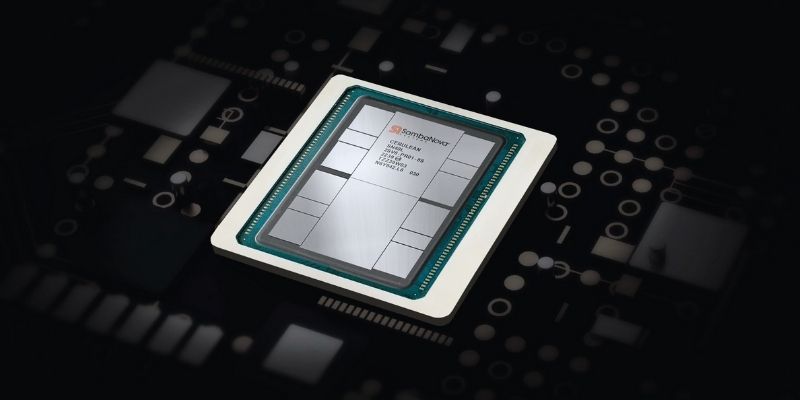

SambaNova AI Launches New Chip: The SN40L Revolutionizes Computing

By Tessa Rodriguez / May 29, 2025

Discover SambaNova's SN40L, a powerful, energy-efficient AI chip designed for speed, scale, and open-source support.

Technologies

How Google Aims to Boost Productivity with Its New AI Agent Tool

By Tessa Rodriguez / Apr 30, 2025

Google boosts productivity with its new AI Agent tool, which automates tasks and facilitates seamless team collaboration.

Technologies

How Qlik AutoML Builds User Trust Through Visibility and Simplicity

By Alison Perry / May 07, 2025

Learn how Qlik AutoML's latest update enhances trust, visibility, and simplicity for business users.

Applications

Copilot vs. Copilot Pro: Key Differences and Upgrade Considerations

By Alison Perry / Apr 28, 2025

Trying to decide between Copilot and Copilot Pro? Find out what sets them apart and whether the Pro version is worth the upgrade for your workflow.

Technologies

How UDOP and DocumentAI Make Workflows Smarter: A Complete Guide

By Alison Perry / Apr 23, 2025

Struggling with slow document handling? See how Microsoft’s UDOP and Integrated DocumentAI simplify processing, boost accuracy, and cut down daily work.

Technologies

Understanding Anthropic's New Standard: AI Privacy and Ethical Concerns

By Alison Perry / Apr 30, 2025

Anthropic's new standard reshapes AI privacy and ethics, sparking debate and guiding upcoming regulations for AI development.

Technologies

Mastering Python's any() and all() for Cleaner Code

By Tessa Rodriguez / May 04, 2025

Struggling with checking conditions in Python? Learn how the any() and all() functions make evaluating lists and logic simpler, cleaner, and more efficient.